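Introduction

My home cluster runs v1.18.10 and I recently decided to upgrade it, so this post records the upgrade process.

Preparation

First, download kubeadm

I download the binaries directly; kubeadm, kubectl and the rest can all be found via the link below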

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.20.md

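This time I want to end up on v1.20.4. kubeadm does not support skipping a minor version, though, so the cluster first has to be upgraded to a 1.19 release (I use v1.19.8) and only then to v1.20.4.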
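As a quick sanity check for an upgrade path, here is a minimal sketch that compares only the minor numbers of two versions. The function names `minor_of` and `can_upgrade` are my own, and the sketch assumes both versions share the same major number.

```shell
# Extract the minor number from a version such as v1.19.8 or 1.18.10.
minor_of() {
    echo "$1" | sed 's/^v//' | cut -d. -f2
}

# Succeed only if the target is in the same minor series or exactly one ahead.
# Assumption: the major number is identical, so it is not compared.
can_upgrade() {
    cu_from=$(minor_of "$1")
    cu_to=$(minor_of "$2")
    cu_diff=$((cu_to - cu_from))
    [ "$cu_diff" -ge 0 ] && [ "$cu_diff" -le 1 ]
}

can_upgrade v1.18.10 v1.19.8 && echo "1.18.10 -> 1.19.8: ok"
can_upgrade v1.18.10 v1.20.4 || echo "1.18.10 -> 1.20.4: go through 1.19 first"
```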

First download the required binaries

mkdir 1.20.4 && cd 1.20.4

wget https://dl.k8s.io/v1.20.4/kubernetes-server-linux-amd64.tar.gz

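cd .. && mkdir v1.19.8 && cd v1.19.8

wget https://dl.k8s.io/v1.19.8/kubernetes-server-linux-amd64.tar.gz

Then extract the archive in each of the two directories

tar -zxvf kubernetes-server-linux-amd64.tar.gz

If you have made it this far, I assume downloading the files will not be a problem for you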
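The download steps can be sketched as a small loop. The helper name `server_tarball_url` and the v-prefixed directory names are assumptions of mine; the loop only prints the commands, so nothing is downloaded until you run them yourself.

```shell
# Build the server tarball URL for a given release tag, e.g. v1.19.8.
server_tarball_url() {
    echo "https://dl.k8s.io/$1/kubernetes-server-linux-amd64.tar.gz"
}

# Print (rather than run) the commands for each version. Each version gets
# its own directory next to the other one, not nested inside it.
for ver in v1.19.8 v1.20.4; do
    echo "mkdir -p $ver && (cd $ver && wget $(server_tarball_url "$ver") && tar -zxvf kubernetes-server-linux-amd64.tar.gz)"
done
```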

Upgrading kubeadm on the first master node

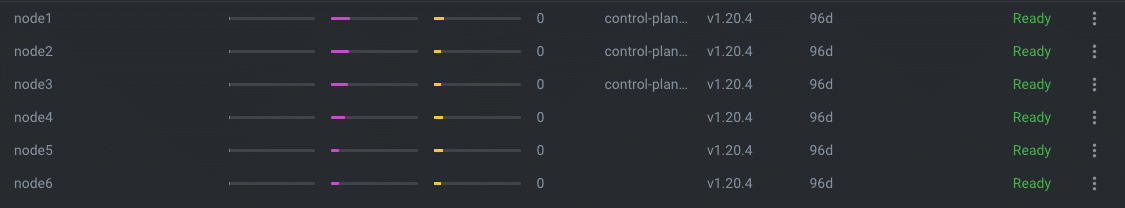

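I forgot to introduce the nodes of my home k8s cluster: there are 6 nodes in total, 3 masters and 3 workers (or 3 control-plane nodes and 3 worker nodes, if you prefer those terms). The workers have 4 cores and 8 GB of memory each, and the masters are 4-core, 8 GB virtual machines running on a NUC.

The IP addresses are assigned as follows

- node1: 10.10.100.21, master

- node2: 10.10.100.22, master

- node3: 10.10.100.23, master

- node4: 10.10.100.31, worker

- node5: 10.10.100.32, worker

- node6: 10.10.100.33, worker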

First back up kubeadm on node1

cp /usr/local/bin/kubeadm /usr/local/bin/kubeadm.bak

Then copy in the new kubeadm from the extracted v1.19.8 archive

cp kubernetes/server/bin/kubeadm /usr/local/bin/kubeadm

Verify the version

kubeadm version

If the output is

kubeadm version: &version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.8", GitCommit:"fd5d41537aee486160ad9b5356a9d82363273721", GitTreeState:"clean", BuildDate:"2021-02-17T12:39:33Z", GoVersion:"go1.15.8", Compiler:"gc", Platform:"linux/amd64"}

then everything is fine
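If you would rather not eyeball that long line, a small sketch like the following pulls out just the GitVersion field. The function name `git_version_of` is my own, and the sample string is a shortened copy of the output above.

```shell
# Pull the GitVersion field out of the long-form `kubeadm version` output.
git_version_of() {
    echo "$1" | sed -n 's/.*GitVersion:"\([^"]*\)".*/\1/p'
}

# Shortened sample of the output; only the fields needed here are kept.
sample='kubeadm version: &version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.8", GitTreeState:"clean"}'
git_version_of "$sample"

# On a real node the text would come from the binary itself:
#   git_version_of "$(kubeadm version)"
```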

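Downloading the images

Every ops engineer working in China has to do this step, since k8s.gcr.io is usually not reachable from there

First check which images are needed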

kubeadm config images list

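Then get the images onto every node by whatever means you have

I pulled the images on another machine through a proxy and then distributed them to all the nodes; how to set up a proxy for pulling images is a separate topic

Prepare a file with the image names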

mkdir images && cd images

images.list

k8s.gcr.io/kube-apiserver:v1.19.8

k8s.gcr.io/kube-controller-manager:v1.19.8

k8s.gcr.io/kube-scheduler:v1.19.8

k8s.gcr.io/kube-proxy:v1.19.8

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

Pull the images

for line in `cat images.list`

do

docker pull $line

done

Save each image to a file

for line in `cat images.list`

do

filename=`echo $line| awk -F "/" '{print $2}'`

docker save $line -o $filename

done
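To see what file names the awk call produces, here is a minimal sketch that prints the `docker save` commands instead of running them. The function name `image_file` is my own. Note that the rule keeps only the second "/"-separated field, so an image whose path contains more than one slash would need a different rule.

```shell
# Same rule as the awk call above: keep the second "/"-separated field.
image_file() {
    echo "$1" | awk -F "/" '{print $2}'
}

# Read image names line by line and print the docker command for each one.
# Drop the "echo" in the loop body to actually run the commands.
printf '%s\n' 'k8s.gcr.io/kube-apiserver:v1.19.8' 'k8s.gcr.io/pause:3.2' |
while read -r image; do
    echo docker save "$image" -o "$(image_file "$image")"
done
```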

Write the load script

vim load.sh

for line in `cat images.list`; do filename=`echo $line| awk -F "/" '{print $2}'`; docker load -i $filename; done

Compress

cd .. && zip -r images.zip images

Distribute the archive to every node; I use ansible

hosts.ini

[k8s]

10.10.100.21

10.10.100.22

10.10.100.23

10.10.100.31

10.10.100.32

10.10.100.33

Check that every node is reachable

ansible k8s -i hosts.ini -m ping

Distribute the file

ansible k8s -i hosts.ini -m copy -a "src=images.zip dest=/data"

Unzip

ansible k8s -i hosts.ini -m shell -a "cd /data && unzip images.zip"

Load the images

ansible k8s -i hosts.ini -m shell -a "cd /data/images && sh load.sh"

Delete the image files

ansible k8s -i hosts.ini -m shell -a "rm -rf /data/images*"

Verifying the upgrade plan

This step is very important. Run

kubeadm upgrade plan

If the output looks like this

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.18.10

[upgrade/versions] kubeadm version: v1.19.8

I0222 15:33:01.322815 75081 version.go:255] remote version is much newer: v1.20.4; falling back to: stable-1.19

[upgrade/versions] Latest stable version: v1.19.8

[upgrade/versions] Latest stable version: v1.19.8

[upgrade/versions] Latest version in the v1.18 series: v1.18.16

[upgrade/versions] Latest version in the v1.18 series: v1.18.16

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

kubelet 6 x v1.18.10 v1.18.16

Upgrade to the latest version in the v1.18 series:

COMPONENT CURRENT AVAILABLE

kube-apiserver v1.18.10 v1.18.16

kube-controller-manager v1.18.10 v1.18.16

kube-scheduler v1.18.10 v1.18.16

kube-proxy v1.18.10 v1.18.16

CoreDNS 1.6.7 1.7.0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.18.16

_____________________________________________________________________

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

kubelet 6 x v1.18.10 v1.19.8

Upgrade to the latest stable version:

COMPONENT CURRENT AVAILABLE

kube-apiserver v1.18.10 v1.19.8

kube-controller-manager v1.18.10 v1.19.8

kube-scheduler v1.18.10 v1.19.8

kube-proxy v1.18.10 v1.19.8

CoreDNS 1.6.7 1.7.0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.19.8

_____________________________________________________________________

The table below shows the current state of component configs as understood by this version of kubeadm.

Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or

resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually

upgrade to is denoted in the "PREFERRED VERSION" column.

API GROUP CURRENT VERSION PREFERRED VERSION MANUAL UPGRADE REQUIRED

kubeproxy.config.k8s.io v1alpha1 v1alpha1 no

kubelet.config.k8s.io v1beta1 v1beta1 no

_____________________________________________________________________

then the cluster can be upgraded to v1.19.8
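The plan output is long, so a sketch like this one can list just the versions it offers. The function name `offered_versions` is my own, and two lines copied from the output above stand in for the real command.

```shell
# List every version the plan output offers via "kubeadm upgrade apply vX.Y.Z".
# Reads the plan text from standard input.
offered_versions() {
    grep -o 'kubeadm upgrade apply v[0-9.]*' | awk '{print $4}'
}

# Two lines taken from the plan output, used here instead of a live cluster.
plan='kubeadm upgrade apply v1.18.16
kubeadm upgrade apply v1.19.8'
echo "$plan" | offered_versions

# On the first master this would be: kubeadm upgrade plan | offered_versions
```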

Upgrading node1

Upgrade the node

kubeadm upgrade apply v1.19.8

If you see

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.19.8". Enjoy!

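then the upgrade succeeded

Drain node1

kubectl drain node1 --ignore-daemonsets

Update kubelet and kubectl (run this from kubernetes/server/bin of the extracted archive)

cp -f kubelet /usr/local/bin/ && cp -f kubectl /usr/local/bin/

Then restart kubelet

systemctl daemon-reload

systemctl restart kubelet

Bring the node back online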

kubectl uncordon node1
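The node1 steps can be collected into one function. This is only a dry-run sketch: the names `run` and `upgrade_first_master` are my own, the binary paths assume the current directory is the extracted v1.19.8 archive, and with `DRY_RUN=1` every command is printed instead of executed.

```shell
# With DRY_RUN=1 each command is only printed; set it to 0 to execute.
DRY_RUN=1
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Steps for the first master, in the same order as above.
# Assumption: kubernetes/server/bin is relative to the current directory.
upgrade_first_master() {
    ufm_node=$1
    ufm_version=$2
    run kubeadm upgrade apply "$ufm_version"
    run kubectl drain "$ufm_node" --ignore-daemonsets
    run cp -f kubernetes/server/bin/kubelet kubernetes/server/bin/kubectl /usr/local/bin/
    run systemctl daemon-reload
    run systemctl restart kubelet
    run kubectl uncordon "$ufm_node"
}

upgrade_first_master node1 v1.19.8
```

For a real run I would also add `set -e` at the top so that a failed step stops the script before the node is uncordoned.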

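Upgrading node2

Upgrading node2 is much the same as node1, but note that running

kubeadm upgrade node

is enough; there is no need to run

kubeadm upgrade apply v1.19.8

The steps are as follows

- Upgrade kubeadm

- Run kubeadm upgrade node

- Drain the node

- Upgrade kubectl and kubelet

- Restart kubelet: systemctl daemon-reload && systemctl restart kubelet

- Uncordon the node to bring it back online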

Upgrading the worker nodes

Upgrading a worker node works the same way as node2, except that kubectl does not need to be upgraded
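The differences between the three kinds of node are small, so they fit into a lookup. This is a sketch with made-up role names (`first-master`, `master`, `worker`) and function names of my own; it only prints what would be done on each node.

```shell
# Print the kubeadm step for a node, depending on its role in the upgrade.
kubeadm_step() {
    case "$1" in
        first-master) echo "kubeadm upgrade apply $2" ;;
        master|worker) echo "kubeadm upgrade node" ;;
        *) echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Workers only need a new kubelet; masters also get kubectl.
binaries_for() {
    case "$1" in
        worker) echo "kubelet" ;;
        *) echo "kubelet kubectl" ;;
    esac
}

for entry in node1:first-master node2:master node4:worker; do
    node=${entry%%:*}
    role=${entry#*:}
    echo "$node: $(kubeadm_step "$role" v1.19.8); replace $(binaries_for "$role")"
done
```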

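Verifying the cluster status

Run

kubectl get nodes

to check the state of the cluster. If every node is Ready, things should be fine; after that, check that all the pods are in a healthy state as well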
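With six nodes it is easy to overlook one, so here is a minimal sketch that prints only the nodes whose status is not exactly Ready. The function name `not_ready_nodes` is my own, and the sample text is made up for illustration. A node that is still cordoned shows up as `Ready,SchedulingDisabled`, so it is reported too, which is a handy reminder of a forgotten uncordon.

```shell
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Input: the output of `kubectl get nodes --no-headers` on standard input.
not_ready_nodes() {
    awk '$2 != "Ready" {print $1}'
}

# Illustrative sample only; the ages and the NotReady node are made up.
sample='node1 Ready master 100d v1.19.8
node2 Ready master 100d v1.19.8
node4 NotReady <none> 100d v1.19.8'
echo "$sample" | not_ready_nodes

# Against a real cluster: kubectl get nodes --no-headers | not_ready_nodes
```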

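Upgrading to v1.20.4

Once the cluster is on 1.19.8 it can be upgraded to 1.20.4 directly; the steps are the same as above

About the network plugin

I use flannel, so I simply re-apply the manifest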

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

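Other notes

During the upgrade, the previous configuration of components such as the api-server is kept under /etc/kubernetes/tmp. If you find that the configuration changed after the upgrade, you can look up the old settings in that folder and adjust the new configuration accordingly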
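To compare an old manifest with the current one, something like the sketch below may help. It assumes the backups are directories named `kubeadm-backup-manifests-<timestamp>` under /etc/kubernetes/tmp, which is what I would expect but have not shown above, so check the folder on your own node first. The function name `latest_backup` is my own.

```shell
# Print the newest backup directory under the given root. Sorting by name is
# enough if the names end in a timestamp (assumption about the naming scheme).
latest_backup() {
    ls -d "$1"/kubeadm-backup-manifests-* 2>/dev/null | sort | tail -n 1
}

# Compare the old api-server manifest with the live one, if a backup exists.
old=$(latest_backup /etc/kubernetes/tmp)
if [ -n "$old" ]; then
    diff "$old/kube-apiserver.yaml" /etc/kubernetes/manifests/kube-apiserver.yaml || true
else
    echo "no manifest backup found"
fi
```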

You are welcome to follow my blog at www.bboy.app

Have Fun