Introduction

In daily work we may use several AI services, such as GitHub Copilot, Azure OpenAI, and Groq, and constantly switching between these platforms gets in the way. One solution is to build an AI gateway that puts all of these services behind a single entry point and makes them easier to use. Here are some related projects I found that can serve as references:

Architecture

The goal is a simple but effective architecture. ChatGPT-Next-Web serves as the user interface, and one-api acts as the AI gateway, forwarding each request to the appropriate AI service: Azure OpenAI (through an extra forwarding layer), GitHub Copilot (through copilot-gpt4-service), or Groq.
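one-api exposes an OpenAI-compatible API, and each upstream service is registered in it as a channel that serves a set of models. The toy sketch below only illustrates the routing idea of choosing a channel by the requested model name; it is not one-api's actual selection logic, and the `Channel` type, channel names, and URLs are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    """One upstream AI service registered in the gateway (hypothetical shape)."""
    name: str
    base_url: str
    models: frozenset[str]


# Hypothetical channel table; names and URLs are placeholders, not real config.
CHANNELS = [
    Channel("copilot", "http://copilot-gpt4-service:8080",
            frozenset({"gpt-4", "gpt-3.5-turbo"})),
    Channel("groq", "https://api.groq.com/openai",
            frozenset({"mixtral-8x7b-32768", "llama2-70b-4096"})),
]


def route(model: str) -> Channel:
    """Return the first channel (in table order) that serves `model`."""
    for channel in CHANNELS:
        if model in channel.models:
            return channel
    raise LookupError(f"no channel serves model {model!r}")
```

The client never needs to know which upstream answered; it only sends a model name, and the gateway's table decides where the request goes.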

Setting Up ChatGPT-Next-Web

I chose to deploy ChatGPT-Next-Web on Vercel and use Cloudflare as the CDN. If you wish to use Docker Compose for deployment, you can refer to the following configuration file:

```yaml
version: "3.9"
services:
  chatgpt-next-web:
    container_name: chatgpt-next-web
    image: yidadaa/chatgpt-next-web:v2.11.3
    restart: "always"
    volumes:
      - "/etc/localtime:/etc/localtime"
    ports:
      - "3000:3000"
    environment:
      - CUSTOM_MODELS=-all,+gpt-3.5-turbo,+gpt-4,+mixtral-8x7b-32768,+llama2-70b-4096
      - BASE_URL=xxxxxxxxxxxxxx
      - CODE=xxxxxxxxxxxxxxxxxx
      - OPENAI_API_KEY=xxxxxxxxxxxxxxxxxx
```

Environment variable explanation:

- BASE_URL: the address of one-api
- CODE: the password for accessing ChatGPT-Next-Web
- OPENAI_API_KEY: an API token created in one-api
- CUSTOM_MODELS: `-all` hides all default models, and each `+name` entry adds one back. I listed only two models served by Groq and two GPT models, since these are the ones I use most.
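The `CUSTOM_MODELS` value is a comma-separated list of edits applied to ChatGPT-Next-Web's default model list. The toy parser below makes the effect of the value used here concrete. It is my own simplified re-implementation, handling only `-all`, `+name`, and `-name` (not the display-name syntax), and the default list passed in is hypothetical.

```python
def visible_models(custom_models: str, defaults: list[str]) -> list[str]:
    """Apply a CUSTOM_MODELS-style edit string to a default model list."""
    models = list(defaults)
    for entry in custom_models.split(","):
        entry = entry.strip()
        if not entry:
            continue
        if entry == "-all":
            # Hide every model seen so far, including the defaults.
            models = []
        elif entry.startswith("-"):
            # Hide a single model by name.
            models = [m for m in models if m != entry[1:]]
        else:
            # "+name" (or a bare name) adds a model if it is not already shown.
            name = entry[1:] if entry.startswith("+") else entry
            if name not in models:
                models.append(name)
    return models
```

With the value from the Compose file, only the four listed models remain, in the order they were added.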

Setting Up one-api

I chose to deploy one-api in Kubernetes (k8s). You can refer to the following StatefulSet configuration:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: one-api
  namespace: app
spec:
  selector:
    matchLabels:
      app: one-api
  serviceName: one-api
  replicas: 1
  template:
    metadata:
      labels:
        app: one-api
    spec:
      containers:
        - name: one-api
          image: justsong/one-api:v0.6.1
          ports:
            - containerPort: 3000
              name: one-api
          env:
            - name: TZ
              value: Asia/Shanghai
            - name: SQL_DSN
              value: root:xxxxxxxx@tcp(mysql:3306)/one-api # MySQL address
            - name: SESSION_SECRET
              value: xxxxxxx # set this to any random string
          args:
            - "--log-dir"
            - "/app/logs"
          volumeMounts:
            - name: one-api-data
              mountPath: /data
              subPath: data
            - name: one-api-data
              mountPath: /app/logs
              subPath: logs
            - name: timezone
              mountPath: /etc/localtime
              readOnly: true
      volumes:
        - name: timezone
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
  volumeClaimTemplates:
    - metadata:
        name: one-api-data
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 50Gi
```
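`SESSION_SECRET` only needs to be a random string, and `SQL_DSN` follows the `user:password@tcp(host:port)/dbname` shape shown in the manifest. A small helper for producing both values, as a sketch: the function names are made up for illustration, and the DSN builder does no escaping, so keep the password simple or check the MySQL driver's DSN rules.

```python
import secrets


def make_session_secret(nbytes: int = 32) -> str:
    """Return a cryptographically random hex string (2 hex chars per byte)."""
    return secrets.token_hex(nbytes)


def make_sql_dsn(user: str, password: str, host: str, port: int, db: str) -> str:
    """Build a DSN of the form user:password@tcp(host:port)/dbname (no escaping)."""
    return f"{user}:{password}@tcp({host}:{port})/{db}"


if __name__ == "__main__":
    print("SESSION_SECRET =", make_session_secret())
    print("SQL_DSN =", make_sql_dsn("root", "xxxxxxxx", "mysql", 3306, "one-api"))
```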

Integrating Azure OpenAI

Although one-api supports Azure OpenAI natively, I kept getting 404 errors in my tests, so I put a forwarding layer behind one-api. You can use my ai-gateway project for this: build an image with Docker and set a few environment variables. Then, in one-api, add a channel of the Custom type, set its Base URL to your container's address, and enter any value as the key.
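Azure OpenAI differs from the OpenAI API mainly in the URL and the auth header: requests go to a per-deployment path with an `api-version` query parameter, and the key is sent in an `api-key` header. A forwarder essentially rewrites these. The sketch below shows only that translation; it is not the code of the ai-gateway project, and the resource name, model-to-deployment mapping, and API version in the usage are example values.

```python
from urllib.parse import urlencode


def azure_chat_url(resource: str, deployment: str, api_version: str) -> str:
    """Azure OpenAI chat-completions URL for a single deployment."""
    query = urlencode({"api-version": api_version})
    return (f"https://{resource}.openai.azure.com/openai/deployments/"
            f"{deployment}/chat/completions?{query}")


def to_azure(body: dict, key: str, resource: str,
             deployments: dict[str, str], api_version: str) -> tuple[str, dict[str, str]]:
    """Map an OpenAI-style chat request to an Azure URL plus headers.

    `deployments` is a hypothetical mapping from OpenAI model names to Azure
    deployment names, because Azure routes by deployment rather than by model.
    The JSON body itself is left untouched by this sketch.
    """
    deployment = deployments[body["model"]]
    url = azure_chat_url(resource, deployment, api_version)
    headers = {"api-key": key, "Content-Type": "application/json"}
    return url, headers
```

For example, `to_azure({"model": "gpt-4", "messages": []}, "k", "myres", {"gpt-4": "gpt4-prod"}, "2024-02-01")` targets the `gpt4-prod` deployment of the `myres` resource.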

Integrating GitHub Copilot

You can use copilot-gpt4-service to integrate GitHub Copilot. Here is my configuration:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: copilot-gpt4-service
  namespace: app
spec:
  selector:
    matchLabels:
      app: copilot-gpt4-service
  serviceName: copilot-gpt4-service
  replicas: 1
  template:
    metadata:
      labels:
        app: copilot-gpt4-service
    spec:
      containers:
        - name: copilot-gpt4-service
          image: aaamoon/copilot-gpt4-service:0.2.0
          ports:
            - containerPort: 8080
              name: copilot
          env:
            - name: TZ
              value: "Asia/Shanghai"
            - name: CACHE_PATH
              value: "/db/cache.sqlite3"
          volumeMounts:
            - name: copilot-gpt4-service-data
              mountPath: /db
            - name: timezone
              mountPath: /etc/localtime
              readOnly: true
      volumes:
        - name: timezone
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
  volumeClaimTemplates:
    - metadata:
        name: copilot-gpt4-service-data
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 50Gi
```

After deploying the container, use the following command to get the token:

```bash
python3 <(curl -fsSL https://raw.githubusercontent.com/aaamoon/copilot-gpt4-service/master/shells/get_copilot_token.py)
```

When adding the channel in one-api, choose the Custom type, set the address to your container's address, and use the token you just obtained as the key. The service currently supports only the GPT-4 and GPT-3.5-turbo models, but in my experience it responds faster than Azure OpenAI.
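Before registering the channel, it can help to send one request straight to the container. The sketch below builds (but does not send) an OpenAI-style chat-completions request, assuming the service accepts the token as a standard `Bearer` key on `/v1/chat/completions`. The helper name and the address `http://copilot-gpt4-service:8080` are hypothetical; the address assumes a Service with that name in front of the StatefulSet.

```python
import json
import urllib.request


def build_chat_request(base_url: str, key: str, model: str,
                       prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions POST request without sending it."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=body,
        headers={"Authorization": f"Bearer {key}",
                 "Content-Type": "application/json"},
        method="POST",
    )
```

To actually send it, pass the request to `urllib.request.urlopen` and read the JSON response; a reply with a `choices` list means the token and address are working.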

Integrating Groq

Groq is also added as a Custom channel: set the address to Groq's API endpoint and the key to your Groq API key.

Others

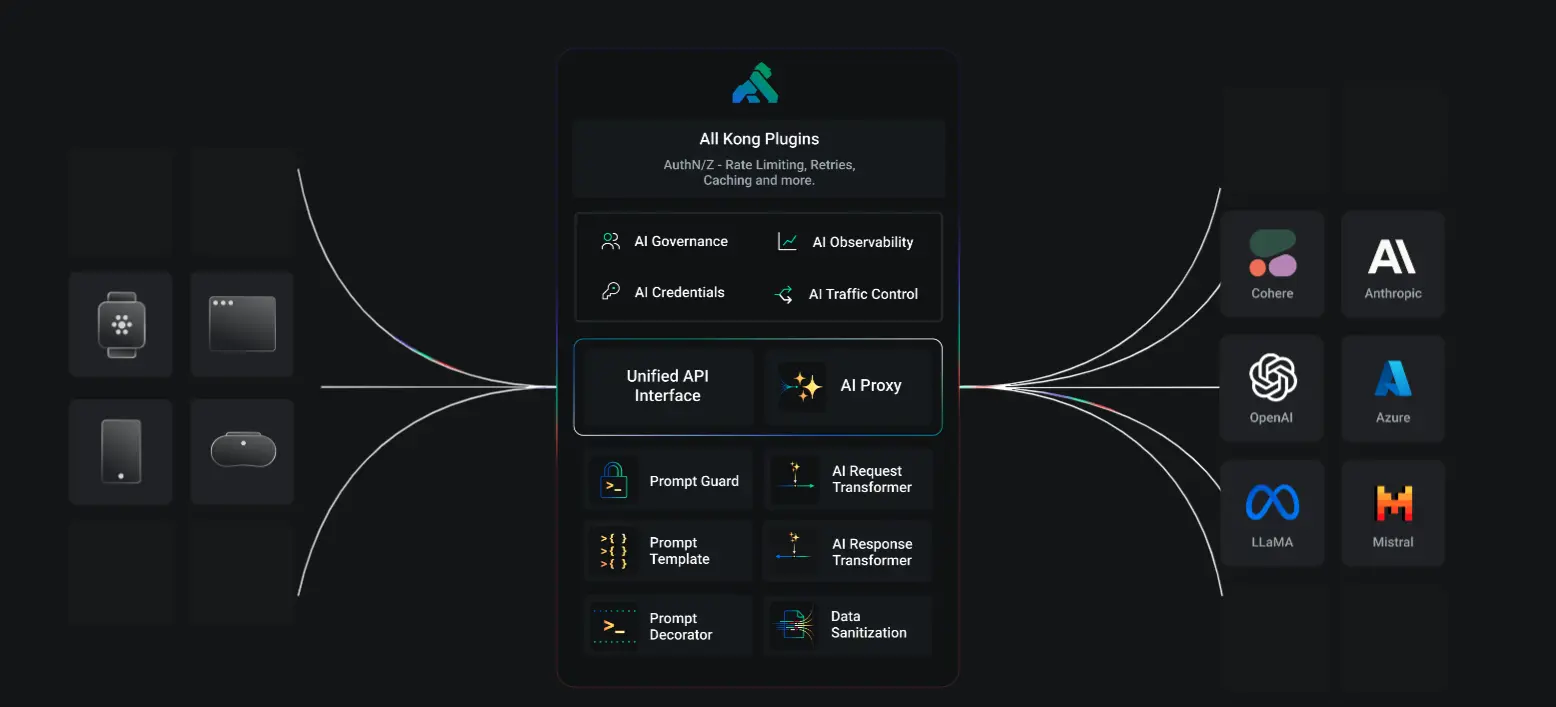

Besides one-api, there are other AI gateways to choose from, such as Kong and Cloudflare. Cloudflare's AI Gateway supports caching, logging, and rate limiting, which are very useful in many enterprise scenarios.
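Of these features, rate limiting is the easiest to picture. A common technique is the token bucket: each request spends one token, and tokens refill at a fixed rate up to a maximum burst size. The sketch below is a generic token bucket for illustration, not how Cloudflare or Kong implement it; the injectable clock is there only to make it easy to test.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start with a full bucket
        self.last = clock()

    def allow(self) -> bool:
        """Spend one token if available; return whether the request may pass."""
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per API key or per client, so one noisy user cannot exhaust the upstream quota for everyone.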

Feel free to follow my blog at www.bboy.app

Have Fun